I continue to rely heavily on Bash as my go-to scripting language despite knowing I’d benefit greatly from going deep down the Python rabbit hole. I started my journey in tech as a Linux sysadmin and have been an ardent fan of the Bash shell for quite some time now. Nine times out of ten I can find a way to complete whatever scripting or automation task is sitting in front of me using Bash and the default utilities, especially when it comes to parsing text. Utilities like awk, cut, sed, and grep become very powerful when combined with Bash’s control structures.

While working on a little project this weekend I came to the realization that my single-threaded process was taking way too long to perform the 50,000+ tasks I had slated for it. After briefly looking at rewriting the Bash script in Python, I realized I needed to do a little digging and see if there was some way to run Bash scripting jobs concurrently. I’ve toyed around with backgrounding processes and using the wait command but have never been happy with the outcome.

So after some short search-fu, I ran across xargs and realized it can act as a process pool manager using the --max-procs flag. I was overjoyed and pissed all at the same time. How had I previously missed this?!?! We live and we learn, I suppose…

After testing a bunch of different scripting scenarios, I came to the realization that by exporting functions you can create a single Bash script that works through a pool of jobs via concurrently executing processes. Sweet!

Let’s begin digging into this scripting technique by looking at the basics of how to ask xargs to manage a basic pool of jobs.

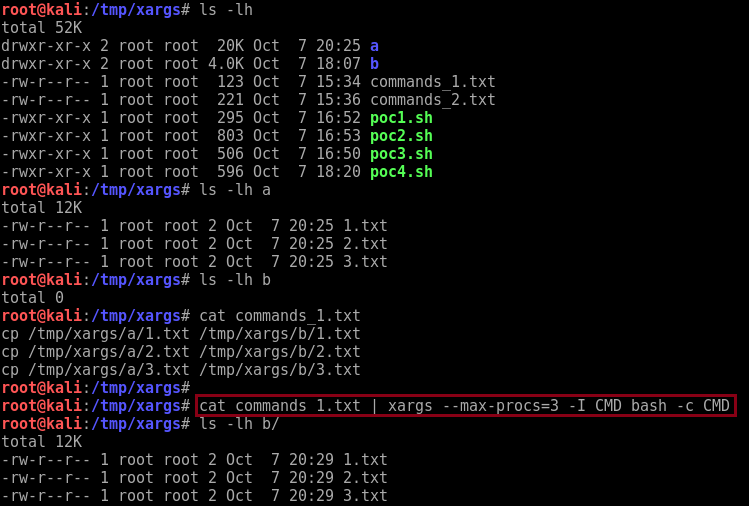

The following image depicts a scenario where I have three files in a directory named ‘a’ that I would like to copy to a directory named ‘b’. After placing the commands to perform the copies into a file I then pipe it into xargs to execute the commands concurrently.

Note the two command flags that are utilized.

-P max-procs, --max-procs=max-procs

Run up to max-procs processes at a time; the default is 1. If max-procs is 0, xargs will run as many processes as possible at a time. Use the -n option or the -L option with -P; otherwise chances are that only one exec will be done. While xargs is running, you can send its process a SIGUSR1 signal to increase the number of commands to run simultaneously, or a SIGUSR2 to decrease the number. You cannot increase it above an implementation-defined limit (which is shown with --show-limits). You cannot decrease it below 1. xargs never terminates its commands; when asked to decrease, it merely waits for more than one existing command to terminate before starting another.

-I replace-str

Replace occurrences of replace-str in the initial-arguments with names read from standard input. Also, unquoted blanks do not terminate input items; instead the separator is the newline character.

All this example does is pump a file of commands through xargs, which substitutes each line for the ‘CMD’ token in the call to bash. Plain and simple.
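Since that example is only shown as a screenshot, a rough text version might look like the sketch below. The scratch directory, the file names, and `commands.txt` are all made up for illustration, and `-P 3` is simply the short form of `--max-procs=3`. It also assumes the temporary path contains no spaces or quotes, since the commands are pasted into the shell as-is.

```bash
#!/bin/bash
# Sketch only: the scratch directory, file names, and commands.txt are made up for illustration.

workdir=$(mktemp -d)
mkdir "${workdir}/a" "${workdir}/b"
touch "${workdir}/a/file1" "${workdir}/a/file2" "${workdir}/a/file3"

# Write one complete shell command per line.
for f in file1 file2 file3; do
    echo "cp ${workdir}/a/${f} ${workdir}/b/${f}"
done > "${workdir}/commands.txt"

# -I CMD swaps the CMD token for each input line.
# -P 3 keeps up to three bash processes running at once.
cat "${workdir}/commands.txt" | xargs -P 3 -I CMD bash -c 'CMD'

ls "${workdir}/b"
```

Each line of the file becomes its own `bash -c` invocation, so anything that is valid on a single shell line can go in there.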

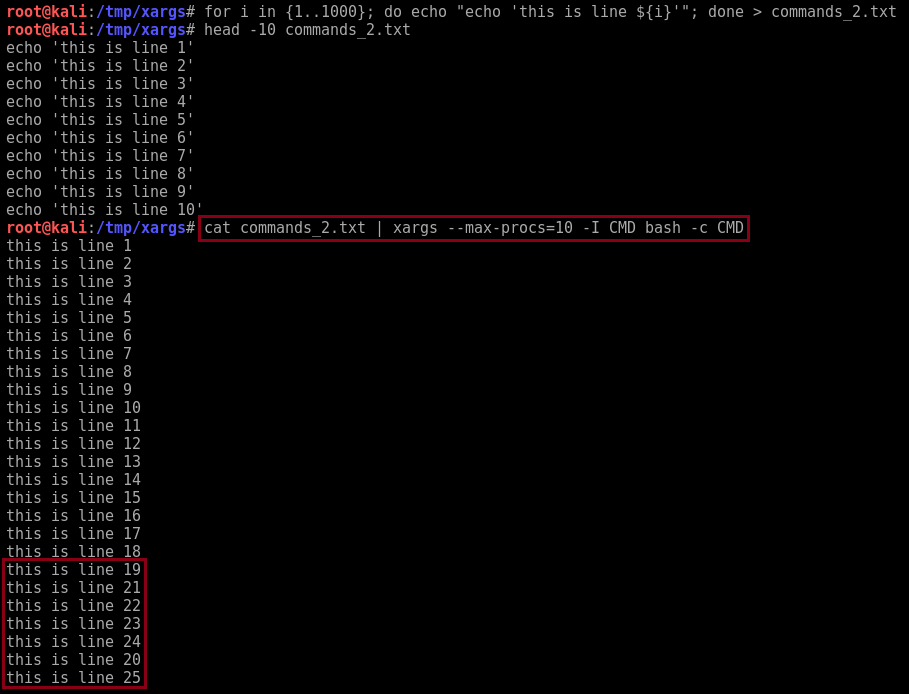

A second example populates a file with a large number of commands and then pumps it through xargs. Note how the line numbers don’t come out in perfect order once run.
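To convince yourself that the shuffled order is harmless, a small check along these lines can help. It is only a sketch: the count of 200 and the `results` variable are arbitrary choices, and the commands are generated on the fly rather than read from a file.

```bash
#!/bin/bash
# Sketch only: the 200-line count and the results variable are arbitrary choices.

# Emit one echo command per line.
printCmds() {
    for i in $(seq 1 200); do
        echo "echo line ${i}"
    done
}

# Run the commands ten at a time and capture everything they print.
results=$(printCmds | xargs -P 10 -I CMD bash -c 'CMD')

# The order may differ from run to run, but every line should be present exactly once.
echo "${results}" | head -5
echo "lines received: $(echo "${results}" | wc -l)"
echo "distinct lines: $(echo "${results}" | sort -u | wc -l)"
```

If the two counts match the number of commands, nothing was lost or duplicated; only the ordering changed.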

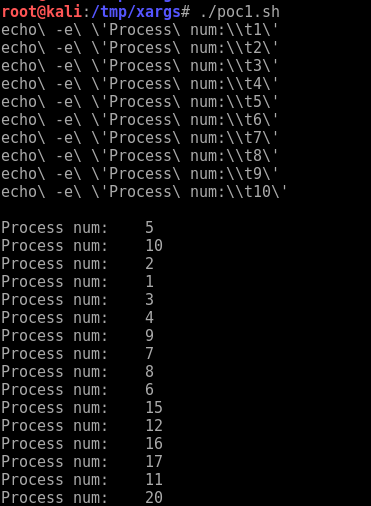

To highlight the concurrency a bit more, and to illustrate how you can add commands outside of the file you’re snarfing, I introduced a slight sleep into each call to bash that xargs is performing. I also introduced an escape character so I could utilize tabs in my output. Note the printf statement I utilized instead of the echo command. It’s much easier to deal with escaping input fed to xargs this way.
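The escaping point is easier to see in isolation. The sketch below uses an invented sample command and sends it through xargs twice, once unescaped and once via `printf "%q"`. It assumes xargs is applying its default quote and backslash handling, which it does when neither `-0` nor `-d` is given.

```bash
#!/bin/bash
# Sketch only: the sample command string is invented for illustration.

cmd="echo -e 'Tab:\there'"

# Unescaped: xargs strips the single quotes, so bash sees a bare \t and drops the backslash.
plain=$(printf '%s\n' "${cmd}" | xargs -I CMD bash -c 'CMD')

# printf %q adds a layer of backslash escaping; xargs strips that layer and bash gets the original string.
escaped=$(printf '%q\n' "${cmd}" | xargs -I CMD bash -c 'CMD')

printf 'plain:   [%s]\n' "${plain}"
printf 'escaped: [%s]\n' "${escaped}"
```

Only the second form should come out with a real tab in it. The full script applies the same idea to 5,000 generated commands.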

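    #!/bin/bash

    # Emit 5,000 shell-escaped echo commands, one per line.
    function printCmds() {
        for i in {1..5000}; do
            #echo "echo -e 'Process num:\\\t${i}'"
            printf "%q\n" "echo -e 'Process num:\t${i}'"
        done
    }

    printCmds | head -10
    echo
    # Sleep 0.1 to 0.3 seconds before each command so the jobs finish out of order.
    # Note: usleep is not installed everywhere.
    printCmds | xargs --max-procs=10 -I CMD bash -c 'usleep $(shuf -i 100000-300000 -n 1); CMD'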

The resulting output from executing the script looks like the following. Notice how the calls to usleep affected the order.

Let’s now move on to using functions so we can group a large number of commands together. This makes life much easier when trying to execute complex scripts as opposed to stacking commands on a single line to feed into bash.

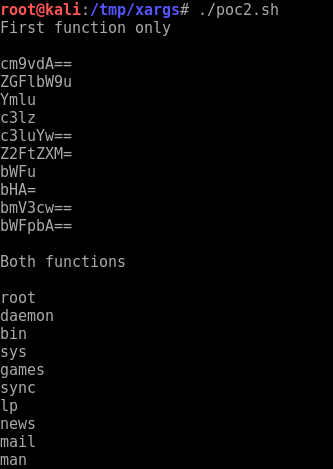

In the following example, I make use of two functions to illustrate how you can pump the output from one group of processes into another call to xargs. Make sure to export the functions you want xargs to use.

    #!/bin/bash

    # Base64-encode the first argument.
    function testFunction1() {
        INPUT=$1
        DO_SOMETHING=$(echo -n "${INPUT}" | base64 -w 0)
        RETVAL=$?
        [[ ${RETVAL} -eq 0 ]] && echo "${DO_SOMETHING}" || echo "Error: Something fucky"
    }

    # Base64-decode the first argument.
    function testFunction2() {
        INPUT=$1
        DO_SOMETHING=$(echo -n "${INPUT}" | base64 -d)
        RETVAL=$?
        [[ ${RETVAL} -eq 0 ]] && echo "${DO_SOMETHING}" || echo "Error: Something fucky"
    }

    # so xargs processes can use
    export -f testFunction1
    export -f testFunction2

    echo "First function only"
    echo
    cut -d':' -f1 /etc/passwd | head -10 | \
        xargs --max-procs=3 -I USERNAME bash -c 'testFunction1 USERNAME'

    echo
    echo "Both functions"
    echo
    cut -d':' -f1 /etc/passwd | head -10 | \
        xargs --max-procs=3 -I USERNAME bash -c 'testFunction1 USERNAME' | \
        xargs --max-procs=3 -I BASE64 bash -c 'testFunction2 BASE64'

The resulting output from executing the script looks like the following.

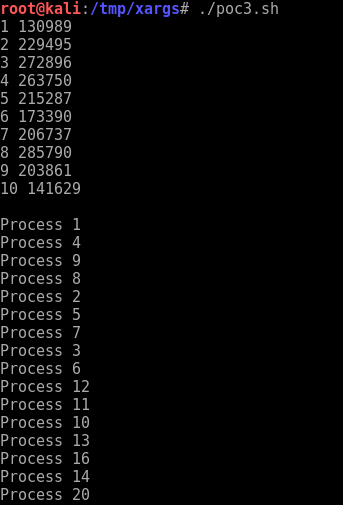
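One variation worth considering when the input is less tame than usernames: hand the item to bash as a positional parameter instead of pasting it into the command string. The sketch below is a hedged illustration, not a replacement for the script above; `encodeItem` and the sample inputs are hypothetical.

```bash
#!/bin/bash
# Sketch only: encodeItem and the sample inputs are hypothetical stand-ins.

encodeItem() {
    local input=$1
    printf '%s' "${input}" | base64
}

# Make the function visible to the bash processes that xargs starts.
export -f encodeItem

# The item arrives as $1 instead of being pasted into the command string,
# so a semicolon in the data is not interpreted as shell syntax.
# The underscore fills $0.
printf '%s\n' 'alice' 'bob; echo oops' |
    xargs -P 3 -I ITEM bash -c 'encodeItem "$1"' _ ITEM
```

With the token pasted directly into the `-c` string, the second input would run `echo oops` as a separate command. Passed as `$1`, it is just data.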

The next example features feeding two parameters into an exported function.

    #!/bin/bash

    # Sleep for SECOND microseconds, then report which job finished.
    function testFunction3() {
        FIRST=$1
        SECOND=$2
        usleep "${SECOND}"
        echo "Process ${FIRST}"
    }

    # spits out lines like the following
    # 1 150000
    # 2 203000
    # 3 298111
    #
    function printSequence () {
        for i in {1..5000}; do
            RAND=$(shuf -i 100000-300000 -n 1)
            echo "${i} ${RAND}"
        done
    }

    # so xargs processes can use
    export -f testFunction3

    printSequence | head -10
    echo
    # ARGS is pasted into the command string, so bash splits it into $1 and $2.
    printSequence | \
        xargs --max-procs=10 -I ARGS bash -c 'testFunction3 ARGS'

Which produces output like the following.

Granted, if you ever want to pass multiple parameters (or overly complex pieces of text) to a function, you can always base64 encode your input and feed it to the function as a single parameter. I performed something similar in the testFunction1 and testFunction2 functions listed in one of the previous examples. The function testFunction2 receives base64 encoded input and decodes it prior to “doing something” with it.
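As a rough illustration of that base64 idea, the sketch below packs two awkward strings into one token and unpacks them inside an exported function. `packFields`, `showFields`, the tab separator, and the sample values are all hypothetical choices, and it assumes neither field contains a tab.

```bash
#!/bin/bash
# Sketch only: packFields, showFields, and the sample values are hypothetical.

# Join two fields with a tab and base64-encode the pair into one whitespace-free token.
packFields() {
    printf '%s\t%s' "$1" "$2" | base64 | tr -d '\n'
    echo
}

# Decode a token and split it back into the two fields at the first tab.
showFields() {
    local decoded tab=$'\t'
    decoded=$(printf '%s' "$1" | base64 -d)
    local first=${decoded%%"${tab}"*}
    local second=${decoded#*"${tab}"}
    echo "first=[${first}] second=[${second}]"
}

# Exported so the bash processes started by xargs can call it.
export -f showFields

{
    packFields 'hello world' 'it costs $5'
    packFields "quote's here" 'a "b" c'
} | xargs -P 2 -I TOKEN bash -c 'showFields "$1"' _ TOKEN
```

Because the token contains only base64 characters, xargs has nothing to misinterpret, and the quotes and dollar signs survive the trip.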

For this last example I attempted to work out how to feed N lines at a time to xargs. I spent way too much time on this and ended up with something rather hackish. Meh… maybe it’ll be useful at some point in the future.
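For comparison, a plainer route to "N items per job" is the `-n` flag mentioned in the `-P` man page text. The sketch below is one possible take under stated assumptions: `reportPair`, `printPairs`, and the delay values are hypothetical, the items contain no blanks or quotes (xargs splits on whitespace here), and `sleep` accepts fractional seconds.

```bash
#!/bin/bash
# Sketch only: reportPair, printPairs, and the delay values are hypothetical.

reportPair() {
    local id=$1 delay=$2
    sleep "${delay}"
    echo "Process ${id} slept ${delay}s"
}
export -f reportPair

# Emit an id line followed by a delay line for each job.
printPairs() {
    for i in 1 2 3 4 5 6; do
        echo "${i}"
        echo "0.$(( i % 3 + 1 ))"
    done
}

# -n 2 passes at most two items to each bash process; they arrive as $1 and $2.
printPairs | xargs -P 3 -n 2 bash -c 'reportPair "$1" "$2"' _
```

The script that follows takes a different route and feeds whole command lines to bash, which is where the null characters come in.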

By default, xargs splits its input on blanks and newlines and treats quotes and backslashes specially (with -I, each whole line becomes one item). I used the ‘tr’ command to swap out newlines with null characters. You can use null characters to your benefit when dealing with xargs, since they sidestep trouble with quotes and backslashes. Check out the man page info for this flag.

-0, --null

Input items are terminated by a null character instead of by whitespace, and the quotes and backslash are not special (every character is taken literally). Disables the end of file string, which is treated like any other argument. Useful when input items might contain white space, quote marks, or backslashes. The GNU find -print0 option produces input suitable for this mode.
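A quick way to see what `-0` buys you is to push a few deliberately nasty strings through it. This is a minimal sketch; `showItems` and the sample strings are invented for the purpose.

```bash
#!/bin/bash
# Sketch only: showItems and the sample strings are invented to include
# characters xargs would otherwise treat specially.

# printf '%s\0' ends each item with a NUL byte.
# With -0, xargs passes spaces, quotes, and backslashes through untouched.
# -n 1 gives each bash process a single item, which arrives as $1 (the underscore fills $0).
showItems() {
    printf '%s\0' 'two words' "it's quoted" 'back\slash' |
        xargs -0 -P 3 -n 1 bash -c 'printf "item=[%s]\n" "$1"' _
}

showItems
```

Without `-0`, the unmatched single quote in the second item would normally make xargs complain.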

Also note the positional parameters fed to bash.

    #!/bin/bash

    # Print the two commands that make up one job, one per line.
    function testFunction4() {
        FIRST=$1
        SECOND=$2
        echo "usleep ${SECOND}"
        echo "echo \"Process ${FIRST}\""
    }

    # spits out lines like the following
    # usleep 150000
    # echo "Process 1"
    # usleep 260700
    # echo "Process 2"
    # usleep 293002
    # echo "Process 3"
    #
    function printSequence () {
        for i in {1..5000}; do
            RAND=$(shuf -i 100000-300000 -n 1)
            testFunction4 "${i}" "${RAND}"
        done
    }

    printSequence | head -10
    echo
    # Each pair of NUL-terminated lines reaches bash as $0 and $1, which it runs in turn.
    printSequence | tr '\n' '\0' | \
        xargs --max-procs=10 -0 -L 2 bash -c '$0; $1'

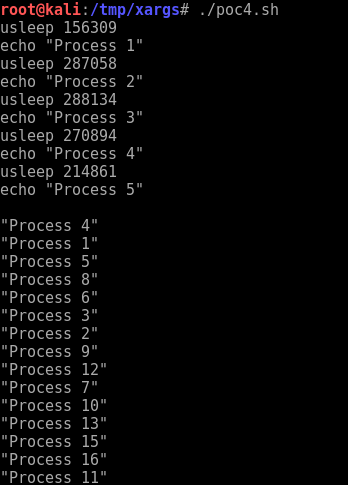

This example produces the following output.

And there you have it. You can now leverage concurrency using command-line Linux utilities and scripts. And with fewer lines of code than Python!